Content

Content is ideas, facts, and information designed to entertain, enlighten, and engage the consumer. It is created to achieve desired outcomes and published in easily shareable formats, including video, audio, text, and infographics, packaged as books, white papers, blog posts, slides, or massive open online courses (MOOCs).

Most content is either created by users or produced by professionals. It is usually created manually and then amplified on social media and websites, where it is shared manually or programmatically. A third category is programmatic content: content generated by machines.

- User-generated content (UGC) is produced by people to express themselves, share opinions, and achieve recognition, notoriety, or influence, usually without expectation of compensation or profit.

Examples of user-generated content include Loom videos and raw Facebook videos.

- Professionally generated content is created by experts and professionals.

News and digital media organizations, artists, writers, and bloggers create professional content.

Businesses create content for marketing, support, public relations, and advertising their products and services.

Businesses also create financial, investment and legal content for stakeholders.

Governments and libraries create statistical, legal and regulatory content.

Academic institutions create both private and publicly accessible content for educational purposes.

- Machine Generated Content is produced programmatically by machines with help from humans.

Machine Generated Content

Programmatic, or machine-generated, content is produced by machines under varying levels of direction from humans. Rules-based report writers and content generators have existed for years, but creating content with artificial intelligence is now gaining mainstream adoption.

We will think of content as generated language, generated or pre-recorded music, audio generated with text-to-speech, and generated video.

Natural Language Generation

Natural Language Generation (NLG) is the task of producing human-understandable language from a data representation. It can be straightforward (e.g., replacing variables with names in a sentence), based on business rules and templates, based on artificial neural networks that learn from human-written text, or a combination of these approaches.

Narrative Science, Yseop, Arria, and AX Semantics have focused on generating natural language from data. Crystal and Phrasee create personalized emails based on personality traits derived from users' online profiles. The Associated Press has automatically created thousands of public-company earnings reports using NLG templates on the Automated Insights platform, with data from Zacks Investment Research.
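
To make the template approach concrete, here is a minimal sketch of how a sentence template plus one business rule might turn a row of earnings data into prose. The `EarningsRow` fields and the wording are hypothetical illustrations; they are not the Automated Insights schema or the Zacks data format.

```python
from dataclasses import dataclass


@dataclass
class EarningsRow:
    # Hypothetical fields; a real data feed would define its own schema.
    company: str
    quarter: str
    eps: float           # reported earnings per share
    eps_estimate: float  # analysts' consensus estimate


def describe_earnings(row: EarningsRow) -> str:
    """Fill a sentence template, choosing the verb with a simple business rule."""
    if row.eps > row.eps_estimate:
        comparison = "beat"
    elif row.eps < row.eps_estimate:
        comparison = "missed"
    else:
        comparison = "matched"
    return (
        f"{row.company} reported {row.quarter} earnings of ${row.eps:.2f} per share, "
        f"which {comparison} analysts' expectations of ${row.eps_estimate:.2f}."
    )


print(describe_earnings(EarningsRow("Example Corp", "third-quarter", 1.42, 1.35)))
# Example Corp reported third-quarter earnings of $1.42 per share, which beat analysts' expectations of $1.35.
```

Because every sentence comes from a fixed template, output like this is predictable and easy to audit, which is one reason template-based NLG suits high-volume, fact-heavy reports.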

There are many more use cases where NLG is useful in generating text (along with video and infographics) from data. Examples include:

- Creating product descriptions from catalog information for electronics, shoes, homes for sale, or almost any other item

- Creating individualized communications with customers, e.g., a quarterly summary of expenses

- Detecting anomalies during data analysis and generating notifications about them

- Generating a bot's responses in chat conversations

- Powering AI-driven digital assistants, which use Natural Language Understanding (NLU) to read and understand user requests and NLG to formulate responses

- Enabling mass personalization based on user profile data
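
The anomaly-notification use case in the list above can be sketched in a few lines: flag unusual values, then describe each one in a sentence. This is a minimal illustration under assumptions of our own: a simple z-score rule with a hypothetical threshold of 2.0 standard deviations stands in for whatever detection method a real product would use.

```python
from statistics import mean, stdev


def find_anomalies(values: list[float], threshold: float = 2.0) -> list[int]:
    """Return indices whose z-score exceeds the (assumed) threshold."""
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # a constant series has no outliers
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


def notify(metric: str, days: list[str], values: list[float]) -> list[str]:
    """Turn each detected anomaly into a one-sentence notification."""
    mu = mean(values)
    messages = []
    for i in find_anomalies(values):
        direction = "above" if values[i] > mu else "below"
        messages.append(
            f"{metric} on {days[i]} was {values[i]:,.0f}, "
            f"well {direction} the average of {mu:,.0f}."
        )
    return messages


days = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]
revenue = [1000, 1020, 980, 1010, 990, 1000, 2500]
print(notify("Revenue", days, revenue))
# ['Revenue on Sunday was 2,500, well above the average of 1,214.']
```

The detection step and the language step are kept separate on purpose: the same sentence generator can sit behind any anomaly detector.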

Image Generation and Regeneration

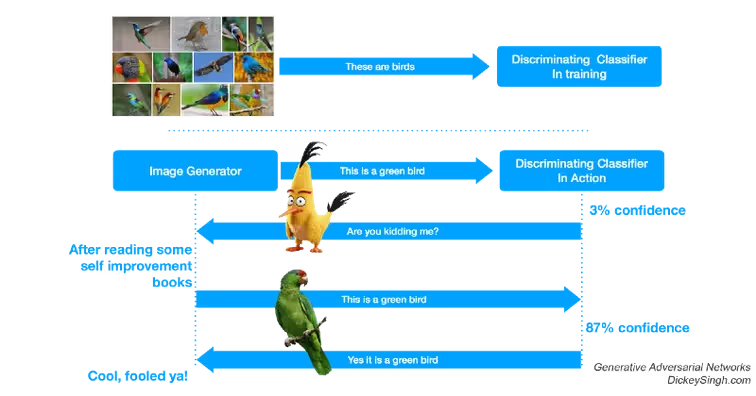

Generative Adversarial Networks (GANs) can help create synthetic images from text descriptions (see research paper), regenerate higher-resolution versions of images (Google), turn synthetic images into realistic ones (Apple won an award for this paper), or generate the next video frame from previous frames.

GANs use deep neural networks and consist of two models: a discriminator “D”, an image classifier that judges whether an image is real or generated, and a generator “G” that creates images intended to pass that test. The discriminator and generator compete, and this competition improves image generation. The discriminator is trained on real-world samples and on the generator's outputs, and the two networks are trained in alternation in an unsupervised setting: feedback from the discriminator improves the generator.
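
The competition between generator and discriminator is usually written as a two-player minimax game. The objective below is the one from the original GAN formulation (Goodfellow et al., 2014), given for reference rather than as the exact method of any system mentioned above. Here $D(x)$ is the discriminator's estimated probability that $x$ is a real image, $G(z)$ is the image the generator produces from random noise $z$, $p_{\text{data}}$ is the distribution of real images, and $p_z$ is the noise distribution:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator is updated to increase $V$ (scoring real images near 1 and generated ones near 0), while the generator is updated to decrease it by producing images the discriminator scores as real.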

Video and Audio Generation and Regeneration

Here are some examples of video and audio generation and regeneration.

Wibbitz helps you create engaging videos for sharing on social media directly from textual content.

Sezion can generate personalized videos from data. For example, it can produce a video for every product on Nike’s online catalog based on the information, images, descriptions, and prices.

Lyrebird can create audio that mimics a person's voice by learning from a sample of it. Lyrebird was started at the Montréal Institute for Learning Algorithms, and its underlying technology is based principally on deep neural networks.

Separately, researchers at Stanford have applied facial re-enactment technology to manipulating faces in video: their program can change the facial expressions of people in YouTube videos in real time.

In 2016, IBM Watson used artificial intelligence to help generate a movie trailer for the sci-fi horror film “Morgan” by analyzing video, audio, and scene composition. Hundreds of horror and thriller movies and trailers were used to train the AI program. The program watched Morgan and selected ten points of interest for use in the trailer.

Brain.fm is an AI music composer that creates music specifically for focusing, relaxing, meditating, or sleeping. You can explore numerous styles, including ambient music for relaxing or thunder music for focusing. Jukedeck also uses AI to compose music.

The music and video art for a popular recent song by Taryn Southern were generated using AI: she wrote the lyrics and vocal melody, and the music and video art were made with Amper Music’s artificial intelligence.

Text Regeneration: Extractive Summarization

Watching a movie, extracting scenes, and compiling them into a trailer is extractive video summarization. Significant effort goes into selecting the key scenes to extract.

In extractive summarization, the system extracts sentences, images, or scenes from a document, image collection, or video respectively without modifying the original content.

Many research papers address extractive video summarization, with approaches based on Long Short-Term Memory (LSTM) networks, ranking, and other deep neural networks.
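
The same extractive idea is easiest to see on text. The sketch below scores each sentence by the average document frequency of its content words and returns the top `k` sentences unmodified, in their original order. It is a simple frequency heuristic loosely in the spirit of Luhn's method, not the approach of any paper mentioned above; the stop-word list and the sentence splitter are deliberately naive assumptions.

```python
import re
from collections import Counter

# A tiny, assumed stop-word list; real systems use much larger ones.
STOP_WORDS = {"a", "an", "the", "and", "or", "of", "to", "in", "is", "it", "for", "on", "with"}


def split_sentences(text: str) -> list[str]:
    # Naive splitter: break after ., ! or ? followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def content_words(sentence: str) -> list[str]:
    return [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOP_WORDS]


def extractive_summary(text: str, k: int = 2) -> list[str]:
    """Pick the k highest-scoring sentences, unmodified and in original order."""
    sentences = split_sentences(text)
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(sentence: str) -> float:
        tokens = content_words(sentence)
        # Average frequency of the sentence's content words across the document.
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    top = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]


text = "The trailer shows the chase. The chase ends at the bridge. Critics liked the music."
print(extractive_summary(text, k=2))
# ['The trailer shows the chase.', 'The chase ends at the bridge.']
```

Returning the chosen sentences in document order mirrors how a trailer keeps extracted scenes in sequence.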

Text Regeneration: Abstractive Summarization

Abstraction can condense text further than extraction by combining Natural Language Understanding (NLU) and Natural Language Generation (NLG): abstractive summarization understands the text and paraphrases it.

TensorFlow has been used to generate headlines from documents automatically with deep-learning sequence-to-sequence learning, a technique that uses two Long Short-Term Memory (LSTM) networks in series: an encoder followed by a decoder. It works well for short documents.

Salesforce used deep reinforcement learning to summarize documents into a “TL;DR” by training on numerous documents and human-written summaries. Abstractive summarization based on Recurrent Neural Networks (RNNs) performs well on short documents. Salesforce combined standard supervised word prediction with reinforcement learning (RL) to produce readable summaries with a ROUGE-1 score of 41.16, a 23% improvement over previous neural text summarization techniques (see research paper).
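
ROUGE-1, the metric behind the 41.16 figure above, measures unigram (single-word) overlap between a generated summary and a human reference summary. Here is a minimal sketch of it. It assumes plain lowercase whitespace tokenization, so it will not exactly reproduce scores from the official ROUGE toolkit, which applies its own preprocessing. Published results are usually reported on a 0 to 100 scale.

```python
from collections import Counter


def rouge_1(candidate: str, reference: str) -> dict[str, float]:
    """Unigram-overlap ROUGE-1 with naive lowercase whitespace tokenization."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Clipped overlap: a word counts at most as often as it appears in both texts.
    overlap = sum((cand & ref).values())
    recall = overlap / sum(ref.values()) if ref else 0.0
    precision = overlap / sum(cand.values()) if cand else 0.0
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


scores = rouge_1("the cat lay on the mat", "the cat sat on the mat")
print({name: round(value, 3) for name, value in scores.items()})
# {'precision': 0.833, 'recall': 0.833, 'f1': 0.833}
```

Five of the six reference words ("the" twice, "cat", "on", "mat") appear in the candidate, so recall is 5/6; because both texts have six words, precision and F1 are also 5/6.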

There has also been an attempt to compare the text summarization methods LexRank, LSA, Luhn, and TextRank with TensorFlow’s Sequence-to-Sequence with Attention Model for Text Summarization.
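
TextRank, one of the methods in that comparison, ranks sentences by running weighted PageRank over a graph whose edges are sentence similarities. The sketch below follows that idea using the TextRank paper's overlap similarity (shared words divided by the sum of log sentence lengths), with two simplifying assumptions of our own: word sets instead of word counts, and a fixed 50 iterations with damping factor 0.85 instead of a convergence test.

```python
import math
import re


def tokenize(sentence: str) -> set[str]:
    """Lowercased word set (a set-based simplification of word counts)."""
    return set(re.findall(r"[a-z']+", sentence.lower()))


def similarity(a: set[str], b: set[str]) -> float:
    """Shared words normalized by log sentence lengths, as proposed for TextRank."""
    if not a or not b:
        return 0.0
    denominator = math.log(len(a)) + math.log(len(b))
    return len(a & b) / denominator if denominator > 0 else 0.0


def textrank(sentences: list[str], d: float = 0.85, iterations: int = 50) -> list[float]:
    """Weighted PageRank over the sentence-similarity graph."""
    tokens = [tokenize(s) for s in sentences]
    n = len(sentences)
    weights = [[similarity(tokens[i], tokens[j]) if i != j else 0.0 for j in range(n)]
               for i in range(n)]
    out_weight = [sum(row) for row in weights]
    scores = [1.0] * n
    for _ in range(iterations):
        # Each sentence receives score from similar sentences, in proportion to edge weight.
        scores = [
            (1 - d) + d * sum(weights[j][i] / out_weight[j] * scores[j]
                              for j in range(n) if out_weight[j] > 0)
            for i in range(n)
        ]
    return scores


def summarize(sentences: list[str], k: int = 2) -> list[str]:
    """Return the k top-ranked sentences in their original order."""
    scores = textrank(sentences)
    top = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]


sentences = [
    "Neural networks summarize text.",
    "Neural networks summarize long text well.",
    "Neural networks can summarize text quickly.",
    "The weather was sunny.",
]
print(summarize(sentences, k=1))
# ['Neural networks summarize text.']
```

A sentence that shares words with many others collects the most score, while an unrelated sentence like the weather line keeps only the baseline `1 - d`.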

Just as abstractive text summarization requires encoders (Natural Language Understanding) and decoders (Natural Language Generation), video and music need to be understood before they can be summarized using abstractive techniques. Natural language understanding of video transcripts, video analysis, scene composition analysis, audio analysis, and object detection may be used to fully understand videos. The summarization output could be text, synthesized audio, or another video generated with a hybrid of extractive and abstractive summarization techniques.

Audio and Video Generation

The ability to generate or summarize stories, music, infographics, and video using AI is powerful.

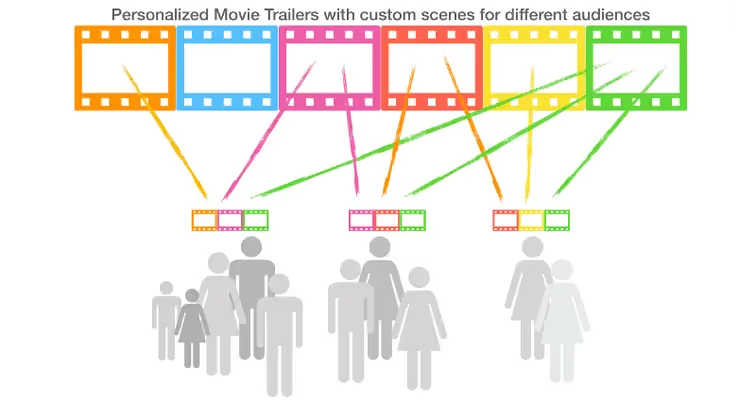

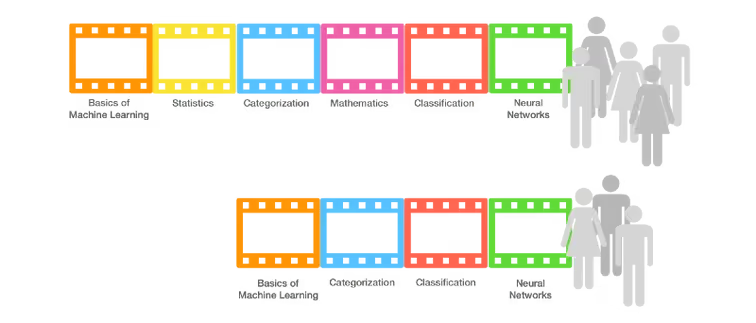

Consumers have varying interests and predilections, so the same content usually does not appeal to everyone. Marketers have long personalized content and interactions with consumers using various segmentation techniques to achieve higher levels of engagement, retention, and revenue.

Films, music, articles, graphics, and stories can similarly be custom-generated based on who they are created for: where they live, where they are from, what their values, interests, opinions, and lifestyles are, what outcomes you want from them, how they behave and interact with various products and services, and what their attention span is for each content type.

Technologies such as facial re-enactment, voice imitation, and video regeneration could be used to create summarized videos.

Deep Personalization and Audio-Video Content

With the ability to generate and regenerate content using artificial intelligence, we can create personalized summaries, trailers, headlines, images, and infographics for everyone.

People who like car chase scenes and action stunts in movies could be shown a trailer custom-suited to them. In fact, extractive summarization could personalize video for different audiences by picking the scenes that would make the film trailer more attractive to them.
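
Picking scenes per audience can be sketched as ranking scenes by how well their labels match a viewer's interests and keeping the best matches in film order. This is a minimal illustration: the `Scene` type, the tag vocabulary, and the overlap-count scoring are all hypothetical; in practice the tags might come from scene analysis and the interests from a viewer profile.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    start_seconds: int  # position of the scene in the film
    tags: set[str]      # hypothetical labels, e.g. from scene analysis


def personalized_trailer(scenes: list[Scene], interests: set[str], k: int = 3) -> list[Scene]:
    """Extract up to k scenes that match a viewer's interests, in film order."""
    # Rank scenes by how many of their tags the viewer cares about.
    ranked = sorted(scenes, key=lambda s: len(s.tags & interests), reverse=True)
    # Drop scenes with no matching tags at all.
    chosen = [s for s in ranked[:k] if s.tags & interests]
    return sorted(chosen, key=lambda s: s.start_seconds)


scenes = [
    Scene(60, {"dialogue", "romance"}),
    Scene(600, {"car chase", "stunt"}),
    Scene(1200, {"explosion", "stunt"}),
    Scene(2400, {"romance", "music"}),
    Scene(3000, {"car chase"}),
]
action_fan = personalized_trailer(scenes, {"car chase", "stunt", "explosion"})
romance_fan = personalized_trailer(scenes, {"romance", "music"})
print([s.start_seconds for s in action_fan])   # [600, 1200, 3000]
print([s.start_seconds for s in romance_fan])  # [60, 2400]
```

The same film yields two different trailers, which is the extractive form of personalization described above.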

Technical readers can be shown a technical summary of a paper, and business managers a business summary. Headlines can be rewritten for different audiences to maximize click-throughs. Audiobooks can be summarized with content personalized for different audiences.
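
Choosing which rewritten headline to show each audience can start with simple arithmetic: compute each variant's click-through rate (clicks divided by impressions) per audience and keep the best one. The log records and headline texts below are hypothetical, and a production system would also need enough traffic per variant (for example, through a proper A/B test) before trusting the comparison.

```python
# Hypothetical logs: (audience, headline variant, impressions, clicks).
LOGS = [
    ("technical", "How the LSTM encoder-decoder works", 1000, 62),
    ("technical", "AI writes summaries for you", 1000, 35),
    ("business", "How the LSTM encoder-decoder works", 800, 16),
    ("business", "AI writes summaries for you", 800, 48),
]


def best_headlines(logs: list[tuple[str, str, int, int]]) -> dict[str, str]:
    """Return the headline with the highest click-through rate for each audience."""
    best: dict[str, tuple[str, float]] = {}
    for audience, headline, impressions, clicks in logs:
        ctr = clicks / impressions if impressions else 0.0
        if audience not in best or ctr > best[audience][1]:
            best[audience] = (headline, ctr)
    return {audience: headline for audience, (headline, _) in best.items()}


print(best_headlines(LOGS))
# {'technical': 'How the LSTM encoder-decoder works', 'business': 'AI writes summaries for you'}
```

Here the technical audience clicks the explanatory headline at 6.2% versus 3.5%, while the business audience prefers the outcome-focused one at 6.0% versus 2.0%.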

Imagine a course custom-produced for each student. Students who took statistics and mathematics in college could be served a different study video than students who did not. Such courses could achieve higher retention, engagement, and value for students.

Personalized content generation can make content more engaging, enlightening, and entertaining for more audiences. AI can help with all types of content, including text, video, and audio.

If personalization is customizing the content people see, deep personalization is the ability to individualize rich content (music, videos, stories, slides, infographics) for each user or for clusters of similar users.
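
Clusters of similar users can be found with a standard clustering algorithm. The sketch below uses k-means (Lloyd's algorithm), one common choice rather than anything this article prescribes. Each user is described by two hypothetical features, the fraction of viewing time spent on action films and on documentaries, and each resulting cluster could then receive its own content variant.

```python
import math

Point = tuple[float, float]


def nearest(point: Point, centroids: list[Point]) -> int:
    """Index of the centroid closest to the point (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda c: math.dist(point, centroids[c]))


def kmeans(points: list[Point], centroids: list[Point], iterations: int = 10) -> list[int]:
    """Lloyd's k-means: returns the cluster index assigned to each point."""
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each user joins the nearest centroid.
        labels = [nearest(p, centroids) for p in points]
        # Update step: move each centroid to the mean of its members.
        updated = []
        for c in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                updated.append((sum(x for x, _ in members) / len(members),
                                sum(y for _, y in members) / len(members)))
            else:
                updated.append(centroids[c])  # keep an empty cluster's centroid in place
        centroids = updated
    return labels


# Hypothetical features: (share of action films, share of documentaries) watched.
users = [(0.9, 0.1), (0.8, 0.2), (0.85, 0.15), (0.1, 0.9), (0.2, 0.8)]
print(kmeans(users, centroids=[users[0], users[3]]))  # [0, 0, 0, 1, 1]
```

Starting from explicit initial centroids keeps the example deterministic; real systems often use randomized initialization such as k-means++ and many more features.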