Acknowledgment: Thanks to Google Cloud and AWS for $265,000 of credits that made this deep dive possible.

Besides automatically learning from your CRM, customer success platforms, data sources, and even your products, Cast.app AI Customer Success Managers (AI CSMs) also explicitly learn from difficult, real-world customer scenarios.

We regularly ask our customers’ human CSMs to share the toughest customer questions—especially those requiring significant research or complex analysis. This additional explicit training uniquely equips Cast.app’s AI CSMs to answer questions immediately, saving human CSMs several hours of valuable time.

Consulting directly with customer-facing teams works well when the number of CSMs, account managers, onboarding specialists, and renewal experts is manageable. But for organizations with hundreds of customer-facing team members, this method quickly becomes impractical, particularly for a company centered around AI automation like Cast.app.

To overcome this challenge, we partnered with a large customer who provided access to 750 anonymized Chorus and Gong recordings of real customer conversations. Our deep analysis of these recordings not only surfaced the challenging questions customers asked the teams but also revealed additional, eye-opening insights that significantly enhanced Cast.app’s AI CSM capabilities.

Thanks to our customer for sharing nearly 700 Chorus and Gong recordings.

Large companies own vast amounts of data, including recordings of conversations between customer-facing teams and customers, and AI is hungry for exactly this kind of data. It is surprising how much the data from one such customer can help us improve our product for all our customers.

Our contract prohibits sharing much, but we are grateful to them for sharing the recordings. Please note that we are sharing only a subset of approved findings below.

All recordings were of customer success, outcomes management, business review, renewal, account management, expansion, or onboarding team members at our customer talking to their customers. We did not receive any new-sales recordings or pre-boarding recordings, as the latter falls under sales.

SDRs (Sales Development Reps), Support Reps, and AEs (Account Executives) typically have clearly defined roles: SDRs focus on lead generation, Support Reps handle customer issues using predefined solutions, and AEs close deals by following established sales playbooks.

In contrast, Customer Success Managers (CSMs) handle many varied responsibilities throughout the entire customer lifecycle, including onboarding new customers, driving adoption, managing renewals, educating users, facilitating upsells and cross-sells by finding leads, and ensuring customers achieve desired outcomes.

Precisely because of this complexity, building an effective AI CSM is significantly more challenging than developing AI SDRs, AI Support Reps, or AI AEs.

If you are new to the power of AI CSMs, see an executive summary here.

Here are some additional links: a) use cases, b) how AI CSMs work behind the scenes, c) data-driven infographics, d) embedding videos, including AI-generated ones, e) advanced ROI use cases, etc.

The higher expectations placed on AI CSMs come with powerful benefits:

Again, AI CSMs don’t just help you scale to 100% of your accounts across all segments—they also deliver personalized scaling for every customer stakeholder.

Imagine having 2,500 customer accounts, each with roughly 10 customer contacts you regularly engage—including individual contributors, executives, and even decision-makers outside the primary line of business, such as CFOs. With Cast.app, you can instantly deploy 25,000 AI CSMs, assigning a dedicated AI CSM to each individual customer contact within seconds.

Both Gong and Chorus conversational intelligence platforms already provide some analytics and alerting.

They are focused on new sales, as evidenced by the stats they provide, namely: customer vs salesperson talk-time ratios, speaker insights & overlaps, question & interruption counts, topic detection, keyword mentions, flagging risky deals, sales-rep performance and coaching, basic sentiment, etc.

We also incorporated a tweaked version of the included analytics in our AI CSM training. Here is what we included.

Talk-Time Ratios: See if the CSM is giving the customer enough “space” to share concerns, feedback, or expansion opportunities. We reduced the weight of Talk-Time Ratios, as these conversations were not sales discovery calls.

Engagement Insights: Monitor how much customers participate in QBRs (Quarterly Business Reviews) and strategic planning sessions. If the customer is silent or disengaged, that may signal retention risk.

Question & Interruption Counts: Track how many questions the customer and the CSM ask, and note moments of interruption, to reveal how smoothly the conversation flows.
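
As a rough illustration of the three speaker metrics above, here is a minimal sketch that computes them from a diarized transcript. The `Turn` structure, the `"csm"` and `"customer"` role labels, and the sample call are assumptions made for this example; they are not the export format of Gong or Chorus, and counting question marks is only a crude proxy for counting questions.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str  # hypothetical role label: "csm" or "customer"
    start: float  # seconds from the start of the call
    end: float
    text: str


def talk_time_ratio(turns):
    """Customer talk time divided by total talk time (0.0 if nobody spoke)."""
    totals = {}
    for t in turns:
        totals[t.speaker] = totals.get(t.speaker, 0.0) + (t.end - t.start)
    total = sum(totals.values())
    return totals.get("customer", 0.0) / total if total else 0.0


def question_counts(turns):
    """Number of question marks per speaker, a crude proxy for questions asked."""
    counts = {}
    for t in turns:
        counts[t.speaker] = counts.get(t.speaker, 0) + t.text.count("?")
    return counts


def interruption_count(turns):
    """Turns that begin before the previous turn by another speaker has ended."""
    ordered = sorted(turns, key=lambda t: t.start)
    return sum(
        1
        for prev, cur in zip(ordered, ordered[1:])
        if cur.speaker != prev.speaker and cur.start < prev.end
    )


# Invented sample call, for illustration only.
call = [
    Turn("csm", 0.0, 30.0, "Here is your adoption summary for the quarter."),
    Turn("customer", 28.0, 40.0, "Sorry to jump in, where does that number come from?"),
    Turn("csm", 40.0, 50.0, "It comes from your usage export. Does that match what you see?"),
    Turn("customer", 50.0, 60.0, "Mostly, yes."),
]

print(round(talk_time_ratio(call), 3))
print(question_counts(call))
print(interruption_count(call))
```

In a weighted engagement score, the talk-time ratio would simply receive a smaller coefficient than it would in a sales discovery setting.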

Renewals & Expansion: Track terms like “renewal,” “upgrade,” or “additional seats.” This helps identify upsell opportunities and plan expansions.

Product Feedback: Spot recurring issues or requests (e.g., “bug,” “integration,” “feature request”) so that CSMs can escalate them appropriately.

Health & Satisfaction Indicators: Look for mentions of terms like “ROI,” “value,” “support issues,” or “adoption challenges” to proactively address churn risks.

Competitor Mentions: Capture keywords such as competitor names.

Partner Mentions: Capture keywords such as the names of partners you work with or offer.
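
The keyword categories above can be approximated with simple term matching. The sketch below is one possible version, not the platforms' topic detection: the term lists reuse the examples quoted in the text, while the competitor and partner names (`acme analytics`, `example partner`) and the sample transcript are placeholders invented for illustration.

```python
import re
from collections import Counter

# Term lists per category. Competitor and partner entries are placeholders.
TOPIC_TERMS = {
    "renewal_expansion": ["renewal", "upgrade", "additional seats"],
    "product_feedback": ["bug", "integration", "feature request"],
    "health": ["roi", "value", "support issues", "adoption challenges"],
    "competitor": ["acme analytics"],
    "partner": ["example partner"],
}


def count_topic_mentions(transcript, topic_terms=TOPIC_TERMS):
    """Count whole-word, case-insensitive matches of each category's terms."""
    text = transcript.lower()
    counts = Counter()
    for topic, terms in topic_terms.items():
        for term in terms:
            # \b keeps "value" from matching inside "valuable";
            # it also means plurals such as "bugs" are not counted.
            pattern = r"\b" + re.escape(term) + r"\b"
            counts[topic] += len(re.findall(pattern, text))
    return counts


# Invented transcript snippet, for illustration only.
sample = (
    "We hit a bug in the integration last month. Before the renewal we want "
    "to see ROI, and we may need additional seats. Acme Analytics pitched us "
    "last week."
)

mentions = count_topic_mentions(sample)
print(dict(mentions))
```

Exact matching misses paraphrases and inflected forms, so a production system would likely add stemming or embeddings on top of a list like this.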

Renewal Pipeline: Treat renewals or expansions as part of a “pipeline.” Use similar analytics (e.g., risk signals if key topics go unmentioned or the main champion isn’t on calls).

Churn Risk Indicators: Flag accounts in danger if certain issues arise repeatedly without clear resolution or if the decision-makers rarely attend calls.
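
The two churn signals described above (issues that recur without resolution, and decision-makers who rarely attend) lend themselves to a simple rule-based check. This is a minimal sketch under stated assumptions: the `CallSummary` fields, the `repeat_threshold` of 2, and the `min_attendance` of 0.5 are hypothetical values chosen for the example, not thresholds taken from the analysis.

```python
from dataclasses import dataclass, field


@dataclass
class CallSummary:
    # All fields are hypothetical; they would come from upstream call analysis.
    issues_raised: set = field(default_factory=set)
    issues_resolved: set = field(default_factory=set)
    decision_maker_present: bool = False


def churn_risk_flags(calls, repeat_threshold=2, min_attendance=0.5):
    """Return human-readable risk flags for one account's call history."""
    raised = {}
    resolved = set()
    for c in calls:
        for issue in c.issues_raised:
            raised[issue] = raised.get(issue, 0) + 1
        resolved |= c.issues_resolved

    flags = []
    for issue in sorted(raised):
        if raised[issue] >= repeat_threshold and issue not in resolved:
            flags.append(f"repeated unresolved issue: {issue}")

    if calls:
        attendance = sum(c.decision_maker_present for c in calls) / len(calls)
        if attendance < min_attendance:
            flags.append("decision-maker rarely attends")
    return flags


# Invented account history, for illustration only.
history = [
    CallSummary({"sso login"}, set(), True),
    CallSummary({"sso login", "export"}, {"export"}, False),
    CallSummary({"sso login"}, set(), False),
]

print(churn_risk_flags(history))
```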

CSM Best Practices: Identify top-performing CSMs’ calls—how they navigate difficult support or renewal conversations, how they drive adoption, and how they surface expansion opportunities.

Team-Wide Training: Help CSMs improve communication styles or conflict resolution techniques, especially if issues come up repeatedly.

We performed a deep analysis on top of the recordings and the analytics provided by the platforms to train our AI Customer Success Managers (AI CSMs) and AI Customer Experience Managers (AI CXMs). The training broadly covers pre-boarding late-stage prospects, onboarding new customer accounts, onboarding users and executives throughout the lifecycle, customer experience, and customer success and account management conversations, including driving usage, adoption, renewals, expansion, outcomes, sentiment-driven real-time actions, and referrals.

Not into the technical details? Feel free to skip ahead to the next section—this part is for those curious about the tools and methods behind the deep analysis.

In addition to the Conversational Intelligence analytics provided by Gong and Chorus, we also used the following technology.

Chorus recordings were transcribed using Deepgram. Deepgram’s ability to handle conversational audio makes it ideal for scenarios where multiple speakers and overlapping dialogue occur.

Why? Deepgram delivers fast, accurate, and scalable transcription, optimized for conversational audio like customer meetings.

Relevance: Enabled efficient processing of a large volume of recordings with high accuracy—essential for downstream analysis like sentiment detection and engagement scoring.

Advantage: Offers enterprise-grade performance with flexible deployment and pricing options, making it well-suited for batch transcription without the need for real-time processing.

The recordings were transcribed to text using Whisper, as the transcriptions provided were missing the context we needed.

Why? Whisper is an open-source automatic speech recognition (ASR) system developed by OpenAI. It was chosen for its high transcription accuracy, support for multiple accents, and affordable cost at scale.

Relevance: High-quality transcription is foundational for downstream tasks like sentiment analysis, clustering, and engagement scoring.

Advantage: Real-time processing was not required in this use case, which made Whisper a cost-effective and accurate option for batch transcription.
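
For readers who want to try batch transcription themselves, here is a minimal sketch using the open-source `openai-whisper` Python package. The model size (`"base"`), the file name, and the `format_segments` helper are assumptions for illustration; the article does not say which model size or post-processing was used. Note that Whisper on its own does not label speakers, so speaker attribution would need a separate diarization step.

```python
def transcribe(path, model_name="base"):
    """Transcribe one recording and return Whisper's timestamped segments.

    Requires `pip install openai-whisper` plus ffmpeg on the PATH.
    The import is done lazily so the helper below works without it.
    """
    import whisper

    model = whisper.load_model(model_name)
    result = model.transcribe(path)
    # Each segment is a dict that includes "start", "end", and "text".
    return result["segments"]


def format_segments(segments):
    """Render segments as '[start-end] text' lines for downstream analysis."""
    return [
        f"[{seg['start']:.1f}s-{seg['end']:.1f}s] {seg['text'].strip()}"
        for seg in segments
    ]


if __name__ == "__main__":
    # "call_001.mp3" is a placeholder file name.
    for line in format_segments(transcribe("call_001.mp3")):
        print(line)
```

Because nothing here needs to run in real time, recordings can simply be processed one after another in a loop or a job queue.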

Used to extract non-verbal cues such as voice tone, pace, volume, and calmness from the original recordings.

Why? Non-verbal signals are often more telling than words in customer interactions, revealing confidence, hesitation, or urgency.

Relevance: Enabled deeper understanding of CSM engagement, customer frustration, or power dynamics—especially in meetings where tone shifted based on executive presence.

Advantage: Augments text-based analysis with emotional and behavioral indicators, giving a more complete picture of the interaction quality and engagement level.
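
To make two of these cues concrete, the sketch below estimates volume and pace using only the Python standard library. It is an illustrative simplification, not the tooling used in the analysis: volume is approximated as RMS level in dB relative to full scale, pace as words per minute over a transcript segment, and the test tone and sample sentence are invented. Tone and calmness would need pitch and spectral features from a dedicated audio library and are not covered here.

```python
import math


def rms_dbfs(samples):
    """Loudness of float samples in [-1, 1], in dB relative to full scale."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def words_per_minute(text, start, end):
    """Speaking pace for one transcript segment with start/end in seconds."""
    duration = end - start
    return len(text.split()) * 60.0 / duration if duration > 0 else 0.0


# Synthetic stand-in for audio: one second of a 440 Hz tone at half amplitude.
SAMPLE_RATE = 16000
tone = [0.5 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]

print(round(rms_dbfs(tone), 2))
print(words_per_minute("let me get back to you on that", 10.0, 12.0))
```

Computed per speaker turn, values like these can be compared across a call, for example before and after an executive joins.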

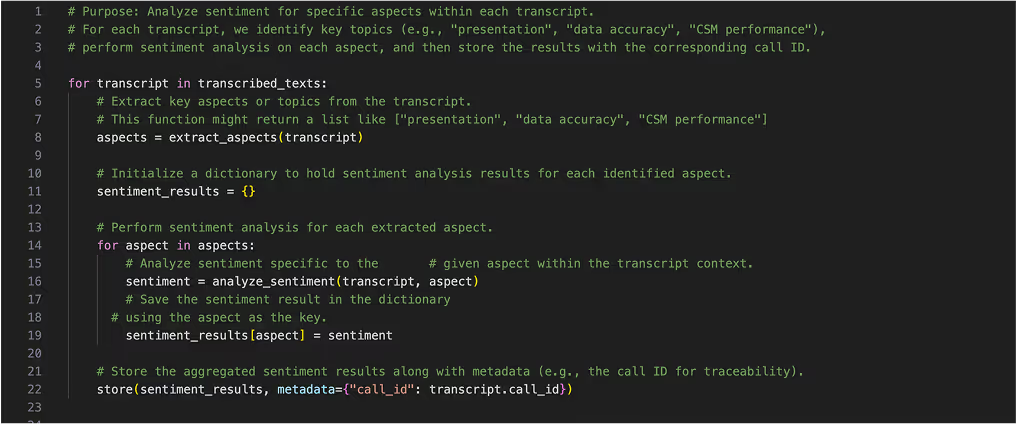

Aspect-based sentiment analysis (ABSA) was applied to identify sentiment tied to specific topics or aspects within the conversation.

Why? Unlike general sentiment classification (positive/negative), ABSA detects how customers feel about individual elements like the product, data accuracy, presentation style, or the CSM’s responsiveness.

Relevance: Enabled analysis such as customers expressing frustration with outdated messaging but positive sentiment toward real-life examples.

Advantage: Provides granular, actionable insights by tying sentiment directly to what was being discussed, not just the overall mood of the call.
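
The idea of tying sentiment to an aspect can be shown with a deliberately tiny lexicon-based toy. This is not a trained ABSA model and not the method used in the analysis; the aspect terms, the positive and negative word lists, and the sample transcript are all invented, and sentiment is assigned to every aspect mentioned in the same sentence.

```python
import re

# Hypothetical aspect vocabulary and sentiment lexicons, for illustration only.
ASPECTS = {
    "messaging": ["messaging", "slides", "deck"],
    "examples": ["example", "examples", "case study"],
    "data accuracy": ["data", "numbers", "report"],
}
POSITIVE = {"helpful", "great", "clear", "love", "useful"}
NEGATIVE = {"outdated", "wrong", "confusing", "frustrating", "inaccurate"}


def aspect_sentiment(transcript):
    """Sum (positive - negative) word counts per aspect, sentence by sentence."""
    scores = {}
    for sentence in re.split(r"(?<=[.!?])\s+", transcript.lower()):
        words = set(re.findall(r"[a-z']+", sentence))
        polarity = len(words & POSITIVE) - len(words & NEGATIVE)
        for aspect, terms in ASPECTS.items():
            mentioned = any(
                re.search(r"\b" + re.escape(term) + r"\b", sentence)
                for term in terms
            )
            if mentioned:
                scores[aspect] = scores.get(aspect, 0) + polarity
    return scores


sample = (
    "The slides felt outdated and confusing. "
    "The real-life examples were really helpful. "
    "I think the numbers in the report are wrong."
)

print(aspect_sentiment(sample))
```

A real ABSA system would use a trained model to handle negation, sarcasm, and aspects that are implied rather than named, but the output has the same shape: one sentiment score per aspect instead of one per call.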

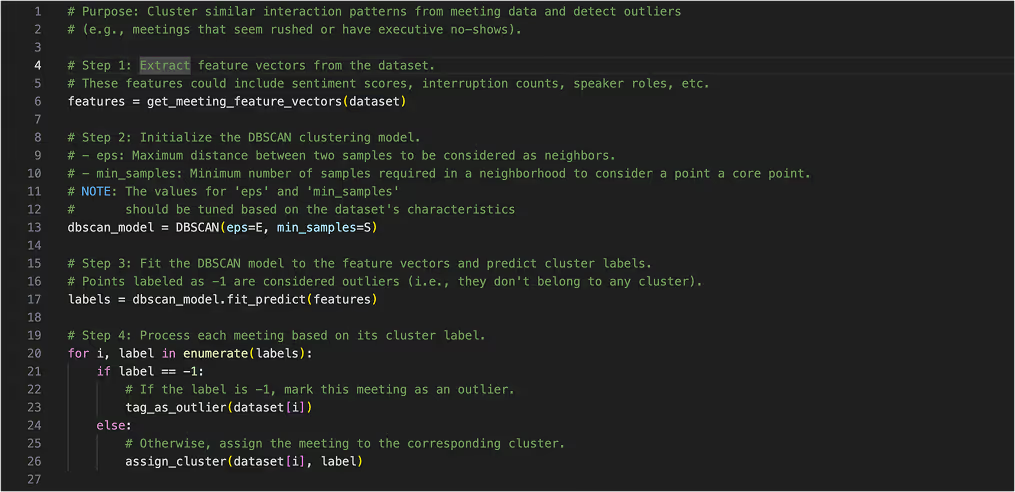

Why? DBSCAN is excellent at identifying patterns where interactions have varying densities, such as when CSM engagement levels fluctuate depending on the presence of executives or the format of the meeting (1:1 vs. group).

Relevance: It effectively detects outliers—such as customer executive no-shows or situations where CSMs were frequently asked to “email the presentation” instead of presenting.

Advantage: Unlike K-means, DBSCAN does not require the number of clusters to be predefined, making it better suited for real-world conversational data where interaction structures naturally vary.
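
To show how DBSCAN finds clusters and outliers without being told how many clusters to expect, here is a compact pure-Python sketch of the algorithm. The points stand in for per-meeting feature vectors that have already been scaled to comparable ranges; the features, the sample values, `eps=0.5`, and `min_samples=3` are assumptions for illustration. In practice a library implementation such as scikit-learn's `DBSCAN` would normally be used instead.

```python
import math

NOISE = -1


def dbscan(points, eps, min_samples):
    """Minimal DBSCAN: one cluster label per point, -1 for noise (outliers)."""
    n = len(points)
    labels = [None] * n  # None means "not visited yet"

    def neighbors(i):
        # Indices of all points within eps of point i (including i itself).
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = NOISE  # may be relabeled later as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins but does not expand
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_samples:  # j is a core point, keep expanding
                queue.extend(nbrs)
    return labels


# Invented, pre-scaled meeting features: two dense groups plus one odd meeting.
meetings = [
    (1.0, 1.0), (1.2, 0.9), (0.9, 1.1), (1.1, 1.2),
    (5.0, 5.0), (5.1, 4.8), (4.9, 5.2), (5.2, 5.1),
    (9.0, 0.5),
]

print(dbscan(meetings, eps=0.5, min_samples=3))
```

The last meeting has no close neighbors, so it is labeled `-1`; that is the kind of point that would correspond to an unusual interaction such as an executive no-show.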

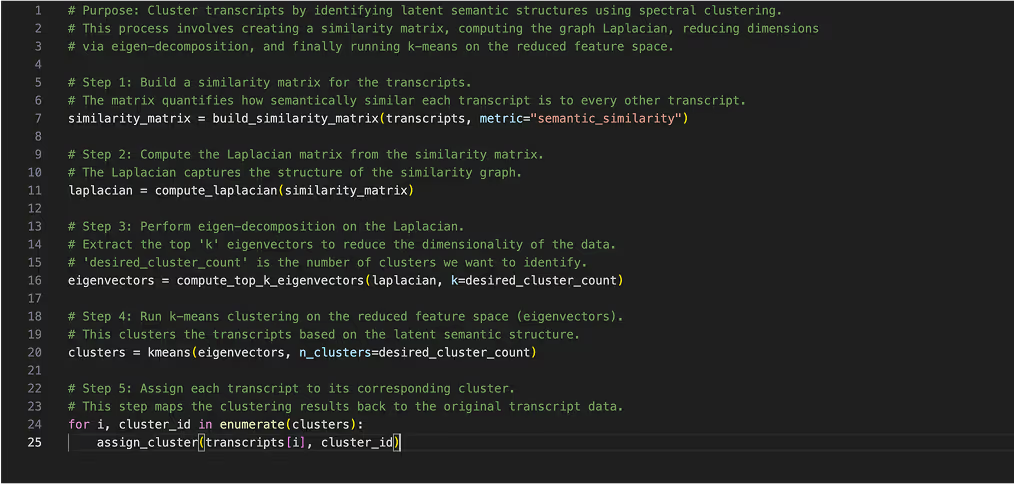

Why? Spectral Clustering leverages a similarity matrix, making it well-suited for high-dimensional conversational data, such as tone, engagement levels, and dialogue interruptions. Spectral clustering is particularly useful when the data does not naturally form spherical clusters, which is common in complex conversational data.

Relevance: Useful for grouping interactions based on latent conversational structures, such as when customers interrupt on slides 4-6, or when CSMs give vague responses like “let me get back to you.”

Advantage: It works well when traditional distance-based methods (like K-means) fail due to the complex nature of human interactions.
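
The role of the similarity matrix can be illustrated with a small NumPy sketch. It builds a Gaussian similarity matrix, forms the graph Laplacian, and splits the data in two using the sign of the second eigenvector (the Fiedler vector). This is a simplified two-way variant: full spectral clustering usually runs k-means on several eigenvectors, and a library such as scikit-learn's `SpectralClustering` would be the usual choice. The ring-shaped data and `sigma=0.5` are invented to show a non-spherical case.

```python
import numpy as np


def spectral_bipartition(points, sigma=0.5):
    """Split points into two groups using the sign of the Fiedler vector."""
    X = np.asarray(points, dtype=float)
    # Pairwise squared distances turned into a Gaussian (RBF) similarity matrix.
    sq_dists = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    W = np.exp(-sq_dists / (2 * sigma ** 2))
    np.fill_diagonal(W, 0.0)
    # Unnormalized graph Laplacian L = D - W.
    L = np.diag(W.sum(axis=1)) - W
    # eigh returns eigenvalues in ascending order; column 1 is the Fiedler vector.
    _, vecs = np.linalg.eigh(L)
    return (vecs[:, 1] > 0).astype(int)


def ring(radius, n):
    """n evenly spaced points on a circle of the given radius."""
    angles = np.linspace(0.0, 2 * np.pi, n, endpoint=False)
    return np.column_stack([radius * np.cos(angles), radius * np.sin(angles)])


# Two concentric rings share the same centroid, so centroid-based
# methods such as K-means cannot separate them.
points = np.vstack([ring(1.0, 20), ring(4.0, 40)])
labels = spectral_bipartition(points)

print(labels[:20])
print(labels[20:])
```

Which ring receives label 0 and which receives label 1 is arbitrary, because the sign of an eigenvector is not fixed; only the grouping is meaningful.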

Both DBSCAN and Spectral Clustering handle irregular conversational patterns, engagement variations, and meeting dynamics better than K-means.

DBSCAN captures outliers, while Spectral Clustering identifies hidden relationship structures in interactions.

They don’t assume clusters are spherical, making them ideal for unstructured customer conversations.

We’re sharing a small subset of selected insights from the 124+ learnings we’ve uncovered to train AI CSMs. The ones highlighted here were specifically chosen because they’re highly relevant to B2B businesses—and are valuable even if your business hasn’t adopted Cast AI CSMs yet.

In 68% of meetings, CSMs responded at least once with “Let me get back to you,” which hurt trust, delayed customer decision-making and flow, and further reduced urgency.

CSMs frequently deferred providing immediate answers, which slowed decision-making and diminished customer confidence. This pattern of response was observed in 68% of meetings, eroding trust and urgency.

CSMs often have to look up information in three systems on average, or consult a technical subject matter expert, before they can answer a question.

AI CSMs can easily answer questions by querying across systems in real time, in a fraction of the time.

One-on-one conversations between a CSM and a specific user at the customer were significantly more engaging and productive than a CSM presenting to several people at the customer.

Smaller, focused conversations allowed for tailored discussions that resulted in higher engagement and more valuable insights. Customers asked more relevant questions and received personalized attention, making these sessions far more effective than group presentations.

Every individual contributor, executive, or decision-maker may have different priorities.

No-shows by customer executives in group meetings weakened the impact and hindered timely decision-making.

When key customer executives were absent, the strategic depth of the discussions suffered, delaying follow-ups and actionable decisions. Their no-shows often left the meetings directionless, reducing overall impact.

Customers were more attentive and respectful toward CSMs when a vendor executive was present.

The presence of a vendor executive, such as a CSM’s supervisor or executive, elevated the perceived importance of the meeting and increased customer engagement. Customers demonstrated higher attentiveness and respect, leading to more structured and impactful interactions.

Customers typically began asking questions around 15% into the conversation—usually the 4th slide—indicating a spike in engagement.

Engagement levels noticeably increased around the fourth slide, suggesting that initial slides serve as an introduction while the core content drives active participation. This trend highlights a natural inflection point where customers become more involved.

Customers frequently questioned data accuracy and CSM responses, eroding trust.

Many customers challenged the reliability of the data and CSM answers, leading to doubts about the insights and recommendations provided by the CSMs. This persistent skepticism undermined trust and reduced the overall credibility of the presentations.

Surprisingly, stories and examples proved to be as persuasive as customer-specific data in upselling and cross-selling.

Even in the absence of detailed, customer-specific data, well-crafted stories, case studies, and examples effectively conveyed value and drove sales opportunities. This finding demonstrates that narrative-driven approaches can be just as compelling in influencing customer decisions.

This was surprising because many companies still claim a lack of data as a reason for not being ready to implement automation.

Even two months after leadership updated messaging or direction, CSMs continued to present outdated messaging.

Despite receiving updated messaging from leadership, many CSMs were still using old materials well after the update, creating inconsistency. This lag not only confused customers but also made the CSMs appear out of sync with current strategic priorities.

CSM leaders expressed significant concern over the continued use of outdated content, slides, and messaging by CSMs.

AI CSMs can quickly adapt to new messaging, ensuring alignment with the latest strategic priorities.

Customers preferred direct access to subject matter experts over engaging solely with CSMs.

Customers often bypassed CSMs, perceiving them as less technically proficient, and instead requested direct connections with experts. This preference highlights the need for a more integrated approach that includes both CSMs and subject matter experts in customer engagements.

Interestingly, Account Managers and Customer Success Managers rushed to complete "commission-generating tasks" even before key intermediary requirements were met.

It was observed that tasks tied to commissions were finalized ahead of essential intermediary steps, indicating a possible misalignment in prioritization. This trend raises questions about the processes and incentives that drive these interactions.

There are several other learnings that may apply to you. If you are interested in learning more, contact us below.

Disclaimers

Four VP and C-level executives I shared this with suggested adding a disclaimer about the learnings.

The insights and learnings presented in this article are based on a deep analysis of customer interactions from specific customer scenarios. Findings may vary significantly across different companies, industries, and use cases. Therefore, these insights may not necessarily apply to your business context. We encourage evaluating the relevance of these insights based on your organization’s specific needs, customer interactions, and operational realities.